I am using a new M1 Mac with macOS 12.0.1. I installed software that caused all sorts of problems (I won't mention the name). I suspected it might be problematic, so I used CCC to take a full backup first. Specifically, I used CCC6 which created a snapshot on my internal drive, and then cloned... Continue Reading →

Installation of Backintime on CentOS7

This guide steps you through installing all the necessary CentOS packages, compiling the necessary libraries, and installing Back-in-time on CentOS7.

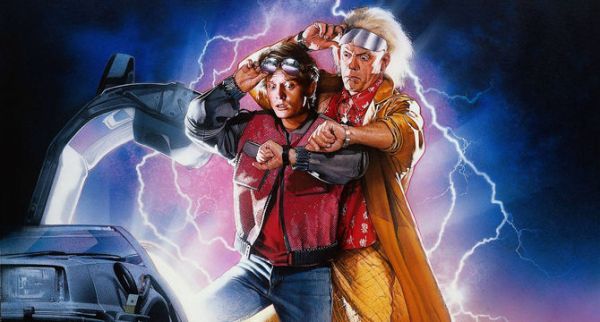

Everyone Loves an Old Fox, Right?

Everyone loves an old Fox, right? Well, actually no. Unlike the Michael J. variety, Mozilla Firefox has slipped into almost complete obscurity as a desktop browser. Depending on your source of data, Firefox's market share has slumped from a peak in 2009 of just over 30%, to a lowly 7.69% in August 2016. Although, it has... Continue Reading →

Docear on macOS: Navigating the Apple Java Nightmare

So this blog post will be brief, as I suppress my disdain for Apple's attitude towards Java, now that they no longer need it to survive due to their App Store ecosystem. This blog post documents how I was able to get the Docear Mindmapping software working on OSX El Capitan (10.11). Sadly, many Java... Continue Reading →

Meeting in the Middle: How to Manage Change in Universities

This blog post is rather small, but is quite significant, to me at least. I've been sent the following paper. http://www.irrodl.org/index.php/irrodl/article/view/224/307 Leadership is a critical element in change management in universities and can be viewed alongside management as distinct but complementary elements in the change process (Ramsden, 1998). Leadership, in Ramsden’s view, is about movement and... Continue Reading →

The reusability paradox – WTF?

WTF? The reusability paradox. How can reusability be bad? When first presented with this concept last year, I must admit I really did struggle with it. As a techie, every fibre of my being compels me to focus on reuse. Hence, the paradox. After some weeks of struggling with the reusability paradox, it did start... Continue Reading →

Moodle Activity Viewer – in the cloud?

So what is MAV? An introduction to MAV written in 2013 is available. Features have been added, but the core concept remains unchanged. In a nutshell, it allows you to visualise student click activity within your Moodle course site using a heat map, colouring links lighter or darker according to the number of times they... Continue Reading →

Being BAD at task management

I have co-authored a paper with a colleague, David Jones, which was published at the ASCILITE2014 conference held in Dunedin, New Zealand. The paper was titled Breaking BAD to bridge the reality/rhetoric chasm. The reality/rhetoric chasm is best expressed through the following metaphor, in the words of Professor Mark Brown: E-learning's a bit like... Continue Reading →

How to Install Backintime Backup Software on CentOS/RHEL/Scientific Linux 7

Introduction Hopefully the title of this post is explanation enough. Backintime is a neat backup solution that mimics, in some ways, the abilities of OS X's Time Machine backup system. At predetermined time intervals, Backintime will sweep configured directories on your computer, and only back up the differences since the last sweep. It results in an efficient use... Continue Reading →

Omnifocus2 – A tale of the disappearing deferred tasks

Sadly, due to work commitments, I have neglected my blog over the past 2 years. I plan to reinvigorate things again, starting with this post that I wrote back in August 2014, but never actually pressed the 'Publish' button. I have recently switched from my existing Mac-based task management software Things, to a new product... Continue Reading →